Keepsake

Version control for machine learning

Lightweight, open source

01 Never lose your work

Train as usual, and Keepsake will automatically save your code and weights to Amazon S3 or Google Cloud Storage.

02 Go back in time

Get back the code and weights from any checkpoint, whether you need to re-train the model or just get its weights out.

03 Toward reproducibility

It is really hard to run and re-train ML models. We are working to fix that, but we need your help to make it a reality.

04 How it works

Just add two lines of code. You don’t need to change how you work.

Keepsake is a Python library that uploads files and metadata (like hyperparameters) to Amazon S3 or Google Cloud Storage.

You can get the data back out using the command-line interface or a notebook.

```python
import torch
import keepsake


def train(learning_rate, num_epochs):
    # Save training code and hyperparameters
    experiment = keepsake.init(
        path=".",
        params={"learning_rate": learning_rate, "num_epochs": num_epochs},
    )
    model = Model()  # your own model class

    for epoch in range(num_epochs):
        # ... train step (computes loss and accuracy) ...

        torch.save(model, "model.pth")
        # Save model weights and metrics
        experiment.checkpoint(
            path="model.pth",
            metrics={"loss": loss, "accuracy": accuracy},
        )
```

05 Open source & community-built

We’re trying to bring the ML community together to build this foundational piece of technology.

06 It's just plain old files on S3

All the data is stored in your own Amazon S3 or Google Cloud Storage bucket as tarballs and JSON files. There’s no server to run.
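To make the "plain old files" idea concrete, here is a minimal sketch using only the Python standard library. It is not Keepsake's actual storage layout: the `checkpoints/` and `metadata/` folder names and the `save_checkpoint` / `load_metadata` helpers are hypothetical, and a local directory stands in for the S3 or GCS bucket. Each checkpoint becomes one gzipped tarball of saved files plus one JSON file of metadata.

```python
import json
import tarfile
from pathlib import Path


def save_checkpoint(root, checkpoint_id, files, metadata):
    """Store one checkpoint as a tarball plus a JSON file.

    Hypothetical layout (not Keepsake's real one):
      <root>/checkpoints/<checkpoint_id>.tar.gz
      <root>/metadata/<checkpoint_id>.json
    """
    root = Path(root)
    (root / "checkpoints").mkdir(parents=True, exist_ok=True)
    (root / "metadata").mkdir(parents=True, exist_ok=True)

    # Bundle the saved files (code, weights) into a single gzipped tarball.
    tar_path = root / "checkpoints" / f"{checkpoint_id}.tar.gz"
    with tarfile.open(tar_path, "w:gz") as tar:
        for f in files:
            tar.add(f, arcname=Path(f).name)

    # Params and metrics go into a plain JSON file alongside it.
    meta_path = root / "metadata" / f"{checkpoint_id}.json"
    meta_path.write_text(json.dumps(metadata, indent=2))
    return tar_path, meta_path


def load_metadata(root, checkpoint_id):
    """Read a checkpoint's metadata back with nothing but the json module."""
    meta_path = Path(root) / "metadata" / f"{checkpoint_id}.json"
    return json.loads(meta_path.read_text())
```

Because the result is just files, listing checkpoints is a directory listing and reading metrics is `json.loads`; moving from a local directory to a bucket would mean swapping the file I/O for an object-storage client.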

07 Works with everything

TensorFlow, PyTorch, scikit-learn, XGBoost, you name it. Keepsake just saves files and dictionaries, so you can export your model however you want.

Throw away your spreadsheet

Your experiments are all in one place, and you can filter and sort them. Because the data’s stored on S3, you can even see experiments that were run on other machines.

```
$ keepsake ls --filter "val_loss<0.2"
EXPERIMENT  HOST        STATUS   BEST CHECKPOINT
e510303     10.52.2.23  stopped  49668cb (val_loss=0.1484)
9e97e07     10.52.7.11  running  41f0c60 (val_loss=0.1989)
```
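A filter such as `"val_loss<0.2"` reads as "metric, operator, value". The sketch below shows one way such an expression could be evaluated over experiment records in plain Python. The `parse_filter` helper, the set of supported operators, and the record shape are assumptions for illustration, not Keepsake's implementation; the two records reuse the numbers from the listing above.

```python
import operator
import re

# Comparison operators this sketch understands (an assumption, not Keepsake's list).
OPS = {
    "<=": operator.le,
    ">=": operator.ge,
    "!=": operator.ne,
    "<": operator.lt,
    ">": operator.gt,
    "=": operator.eq,
}

# Two-character operators come first in the alternation so "<=" wins over "<".
FILTER_RE = re.compile(r"^\s*(\w+)\s*(<=|>=|!=|<|>|=)\s*(.+?)\s*$")


def parse_filter(expr):
    """Turn e.g. "val_loss<0.2" into a predicate over a metrics dict."""
    match = FILTER_RE.match(expr)
    if match is None:
        raise ValueError(f"cannot parse filter: {expr!r}")
    name, op, raw = match.groups()
    value = float(raw)
    compare = OPS[op]
    # Records that lack the metric never match.
    return lambda metrics: name in metrics and compare(metrics[name], value)


experiments = [
    {"id": "e510303", "status": "stopped", "best": {"val_loss": 0.1484}},
    {"id": "9e97e07", "status": "running", "best": {"val_loss": 0.1989}},
]

keep = parse_filter("val_loss<0.2")
print([e["id"] for e in experiments if keep(e["best"])])
```

Returning a predicate keeps parsing separate from iteration, so the same filter can be applied to records loaded from any source.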

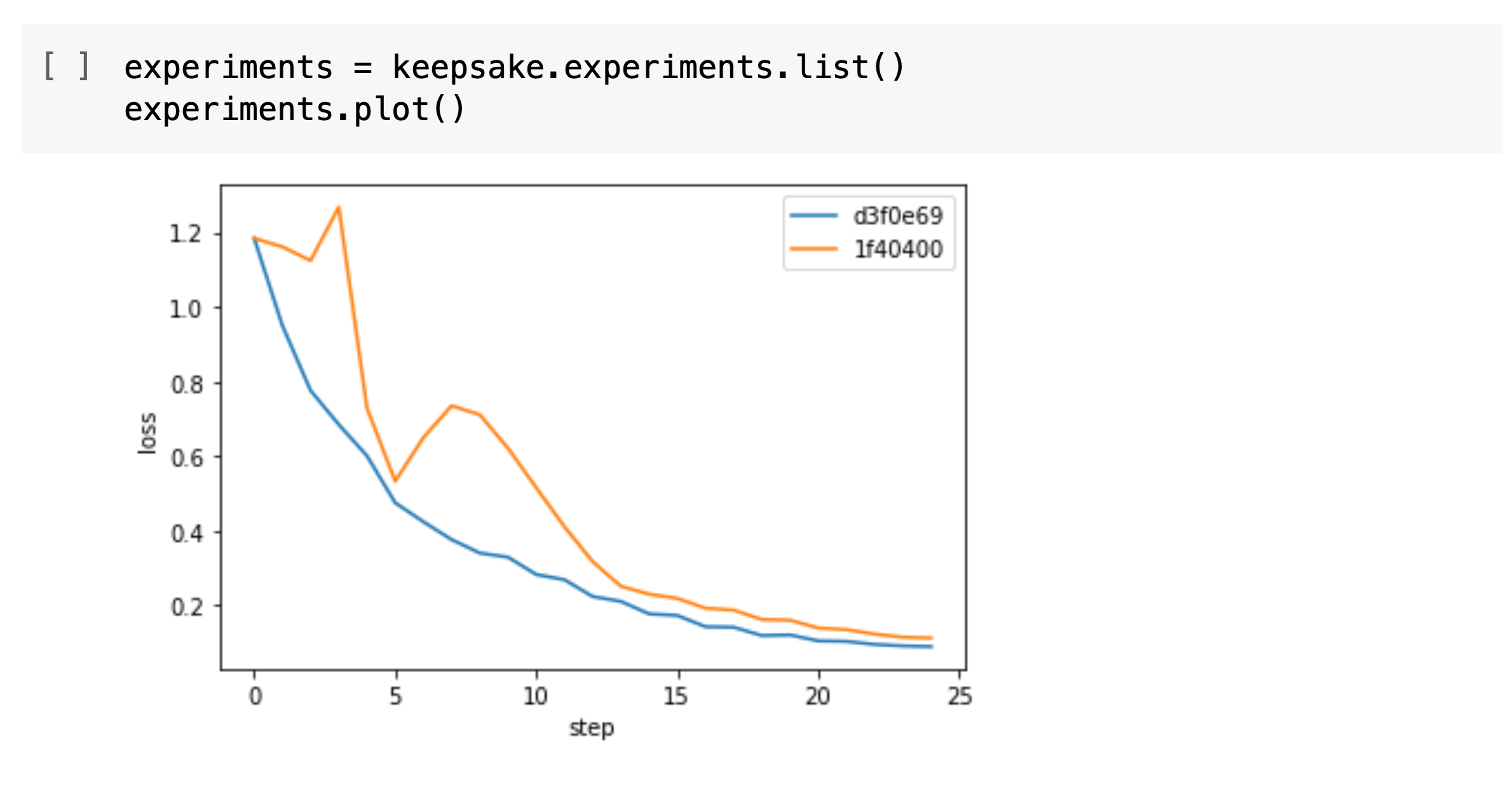

Analyze in a notebook

Don’t like the CLI? No problem. You can get all your experiments out and do meta-analysis on your results from within a notebook.

Compare experiments

Keepsake diffs everything, all the way down to versions of dependencies, just in case that latest TensorFlow version did something weird.

```
$ keepsake diff 49668cb 41f0c60
Checkpoint:       49668cb     41f0c60
Experiment:       e510303     9e97e07

Params
learning_rate:    0.001       0.002

Python Packages
tensorflow:       2.3.0       2.3.1

Metrics
train_loss:       0.4626      0.8155
train_accuracy:   0.7909      0.7254
val_loss:         0.1484      0.1989
val_accuracy:     0.9607      0.9411
```
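Conceptually, a diff like this compares two nested dictionaries of checkpoint metadata section by section and keeps only the keys whose values differ. Here is a minimal sketch of that idea; the `diff_checkpoints` and `ordered_union` helpers and the dictionary shape are hypothetical, and the values are copied from the example output above.

```python
def ordered_union(a, b):
    """Keys of a, then keys only in b, preserving first-seen order."""
    return list(dict.fromkeys([*a, *b]))


def diff_checkpoints(left, right):
    """Return {section: {key: (left_value, right_value)}} for keys that differ.

    A key missing on one side shows up as None on that side.
    """
    changes = {}
    for section in ordered_union(left, right):
        a, b = left.get(section, {}), right.get(section, {})
        differing = {
            key: (a.get(key), b.get(key))
            for key in ordered_union(a, b)
            if a.get(key) != b.get(key)
        }
        if differing:
            changes[section] = differing
    return changes


# Values copied from the example `keepsake diff` output above.
checkpoint_a = {
    "Params": {"learning_rate": 0.001},
    "Python Packages": {"tensorflow": "2.3.0"},
    "Metrics": {"train_loss": 0.4626, "train_accuracy": 0.7909,
                "val_loss": 0.1484, "val_accuracy": 0.9607},
}
checkpoint_b = {
    "Params": {"learning_rate": 0.002},
    "Python Packages": {"tensorflow": "2.3.1"},
    "Metrics": {"train_loss": 0.8155, "train_accuracy": 0.7254,
                "val_loss": 0.1989, "val_accuracy": 0.9411},
}

# Print each differing section with left and right values side by side.
for section, entries in diff_checkpoints(checkpoint_a, checkpoint_b).items():
    print(section)
    for key, (a, b) in entries.items():
        print(f"  {key + ':':<16}{a!s:<10}{b}")
```

Treating dependency versions as just another metadata section is what lets a single diff surface a changed TensorFlow release next to a changed learning rate.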

Get back your code and weights

Keepsake lets you get back to any point where you called `experiment.checkpoint()`, so you can re-train models or get your model weights out.

```
$ keepsake checkout f81069d
Copying code and weights to working directory...

If you want to run this experiment again, this is how it was run:

  python train.py --learning_rate=0.2
```
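Since checkpoints are stored as tarballs, restoring one boils down to unpacking its files into a directory. The sketch below assumes a checkpoint is a single gzipped tarball and adds a guard against archive entries that would land outside the destination. The `checkout` helper is hypothetical and is not Keepsake's code.

```python
import tarfile
from pathlib import Path


def checkout(tar_path, dest="."):
    """Extract a checkpoint tarball into dest and return the restored names.

    Hypothetical helper. It accepts only regular files and directories, and
    refuses members whose resolved path would fall outside dest
    (e.g. "../escape.txt" or absolute paths).
    """
    dest = Path(dest).resolve()
    dest.mkdir(parents=True, exist_ok=True)
    with tarfile.open(tar_path, "r:gz") as tar:
        members = tar.getmembers()
        for member in members:
            if not (member.isfile() or member.isdir()):
                raise ValueError(f"unsupported member type: {member.name}")
            target = (dest / member.name).resolve()
            if target != dest and dest not in target.parents:
                raise ValueError(f"unsafe path in archive: {member.name}")
        # Every member passed the checks, so extract them all.
        tar.extractall(dest, members=members)
    return sorted(m.name for m in members)
```

Validating every member before extracting anything means a bad archive leaves the working directory untouched rather than half-restored.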

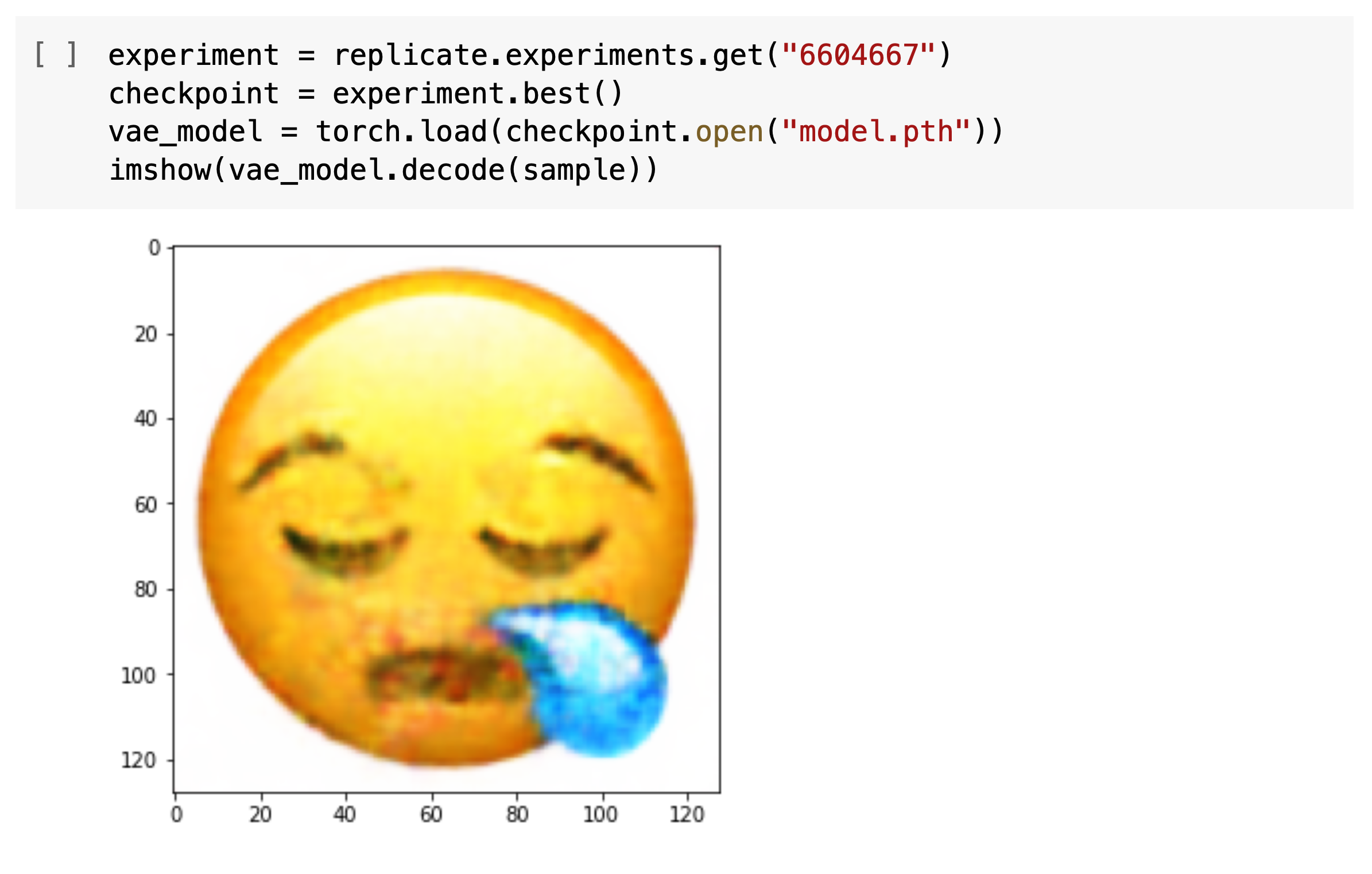

Load models for inference

You can load a specific version of your model in Python to run inference, either from within a notebook or from another program.

A platform to build upon

Keepsake is intentionally lightweight and doesn’t try to do too much. Instead, we give you Python and command-line APIs so you can integrate it with your own tools and workflow.